How to use Gstreamer AppSink in Python


The most convenient way to integrate a video streaming pipeline into your application is through Gstreamer’s appsink plugin. In the following post we’ll explore the Python GObject API for receiving video frames from a Gstreamer pipeline in Python.

Requirements

- Ubuntu 18

- Python 3.6

- Gstreamer with Python Bindings. Look at “How to install Gstreamer Python Bindings on Ubuntu 18”.

- gstreamer-python

Note: gstreamer-python hides a lot of boilerplate code under the hood, so developers can reuse it in their own apps and focus more on video analytics. For example, recall from the previous post, How to launch Gstreamer pipeline in Python, that we had to handle GObject.MainLoop and Gst.parse_launch explicitly. With gstreamer-python we simply use GstContext and GstPipeline instead.

Code

Learn how to:

- use appsink in pipeline

- extract raw bytes from Gst.Buffer

- work with Gst.Sample

- extract image resolution from Gst.Structure (Gst.Caps) and GstVideo.VideoFormat

- set up GstApp.AppSink to receive buffers

Why use AppSink instead of writing a plugin to access buffers from the pipeline?

Recall from “How to write Gstreamer plugin with Python”: there we implemented a Blur Filter as a Gstreamer plugin. In that case the Gstreamer buffer is accessed inside the plugin’s chain() or transform() method. The following table compares both approaches and shows when AppSink is suitable:

| Approach | Pros | Cons | Conclusion |
|---|---|---|---|
| AppSink | 1. Simple to implement; 2. Ability to batch-process frames | 1. Buffer duplication (frames are copied out of the pipeline) | Use for prototypes (pros 1); gives more flexibility but less performance (cons 1) |
| Filter Plugin | 1. Accesses/edits buffers in place | 1. Requires prior Gstreamer knowledge; 2. Batch processing only with a proper mixer plugin; 3. Gstreamer Python bindings have some limitations (e.g. working with metadata) | Use for well-defined pipelines (cons 1); gives less flexibility (cons 3) but more performance (pros 1) |

Note:

- OpenCV uses the appsink/appsrc approach to pull/push frame buffers from/into a Gstreamer pipeline

- Most video-analytics frameworks use plugins to integrate deep learning models into a Gstreamer pipeline
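The “ability to batch-process frames” listed for AppSink simply means that the application decides how many frames to collect before running, say, a model on them. Below is a minimal sketch, assuming every frame is a NumPy array of the same shape (as produced by the conversion step in the guide below). FrameBatcher is a hypothetical helper, not part of gstreamer-python:

```python
import typing as typ

import numpy as np


class FrameBatcher:
    """Hypothetical helper: collects frames and returns them as one (N, *frame_shape) array."""

    def __init__(self, batch_size: int) -> None:
        if batch_size < 1:
            raise ValueError("batch_size must be >= 1")
        self.batch_size = batch_size
        self._frames = []  # type: typ.List[np.ndarray]

    def add(self, frame: np.ndarray) -> typ.Optional[np.ndarray]:
        """Store a frame; return a stacked batch once batch_size frames are collected."""
        self._frames.append(frame)
        if len(self._frames) < self.batch_size:
            return None
        return self._take()

    def flush(self) -> typ.Optional[np.ndarray]:
        """Return leftover frames (e.g. at end of stream) as a smaller batch, if any."""
        return self._take() if self._frames else None

    def _take(self) -> np.ndarray:
        # np.stack copies the frames into one new array with a leading batch axis
        batch = np.stack(self._frames)
        self._frames = []
        return batch
```

In an appsink callback one would call batcher.add(array) for every frame and process the batch whenever the result is not None, then call flush() once the pipeline is done. Note that np.stack copies the frames again, which adds to the buffer-duplication cost mentioned in the table.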

Guide

Define Gstreamer Pipeline

As an example, we take a simple pipeline:

- videotestsrc generates buffers with various video formats and patterns.

- capsfilter is used to specify the desired video format.

- appsink with the <emit-signals> property enabled, to receive buffers from the pipeline in our application.

gst-launch-1.0 videotestsrc num-buffers=100 ! \
capsfilter caps=video/x-raw,format=RGB,width=640,height=480 ! \
appsink emit-signals=True

Note: In any Gstreamer pipeline, replace the sink element with appsink to be able to receive buffers in your application.
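Because the same pipeline description is reused in the Python script and on the command line below, it can help to generate it from parameters. A small sketch; build_pipeline is a hypothetical helper and only uses the elements and properties shown above:

```python
def build_pipeline(width: int = 640, height: int = 480,
                   fmt: str = "RGB", num_buffers: int = 100) -> str:
    """Hypothetical helper: videotestsrc ! capsfilter ! appsink, as in the example above."""
    caps = "video/x-raw,format={},width={},height={}".format(fmt, width, height)
    elements = [
        "videotestsrc num-buffers={}".format(num_buffers),
        "capsfilter caps={}".format(caps),
        "appsink emit-signals=True",  # emit-signals is needed for <new-sample>
    ]
    return " ! ".join(elements)
```

For example, build_pipeline(320, 240, "GRAY8") requests single-channel frames; assuming the channel count for GRAY8 is 1, the frame array has shape (h, w, 1), which the np.squeeze step later reduces to (h, w).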

Write Python Script

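Import gstreamer-python into the Python script, together with the other modules used in the snippets below:

import time
import typing as typ

import numpy as np

import gi
gi.require_version('Gst', '1.0')
gi.require_version('GstApp', '1.0')
gi.require_version('GstVideo', '1.0')
from gi.repository import Gst, GstApp, GstVideo

from gstreamer import GstContext, GstPipeline

First, initialize GstContext (aka the GObject.MainLoop routine):

with GstContext():

Then, create a GstPipeline (aka Gst.parse_launch):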

command = ("videotestsrc num-buffers=100 ! "
           "capsfilter caps=video/x-raw,format=RGB,width=640,height=480 ! "
           "appsink emit-signals=True")

with GstPipeline(command) as pipeline:

Subscribe to the <new-sample> signal, which the appsink element emits for every new sample (this requires emit-signals=True):

appsink = pipeline.get_by_cls(GstApp.AppSink).pop(0)
appsink.connect("new-sample", on_buffer, None)

Implement a callback function to handle the <new-sample> signal. The callback’s arguments are the appsink element itself and the user data, and it returns a Gst.FlowReturn.

def on_buffer(sink: GstApp.AppSink, data: typ.Any) -> Gst.FlowReturn:

AppSink lets the user receive a Gstreamer sample (Gst.Sample, a wrapper around Gst.Buffer with additional info such as caps). To get it, emit the <pull-sample> action signal on appsink:

sample = sink.emit("pull-sample")  # Gst.Sample

Now, extract the Gst.Buffer from the Gst.Sample:

buffer = sample.get_buffer()  # Gst.Buffer

The Gstreamer sample also carries additional information about the video frame, for example its resolution and format:

caps_format = sample.get_caps().get_structure(0)  # Gst.Structure

# GstVideo.VideoFormat
frmt_str = caps_format.get_value('format')
video_format = GstVideo.VideoFormat.from_string(frmt_str)

w, h = caps_format.get_value('width'), caps_format.get_value('height')
c = utils.get_num_channels(video_format)

Note: import utils from the gstreamer-python package to get the number of channels for a specific format:

import gstreamer.utils as utils

Knowing the video frame resolution, we can easily convert the Gst.Buffer to a numpy array and feed the array further into a video analytics pipeline.

array = np.ndarray(shape=(h, w, c),
                   buffer=buffer.extract_dup(0, buffer.get_size()),
                   dtype=utils.get_np_dtype(video_format))

# remove single dimensions (ex.: grayscale image)
array = np.squeeze(array)

Note: utils.get_np_dtype is implemented in gstreamer-python to get the proper np.dtype for any video format.

Finally, the callback returns Gst.FlowReturn.OK to signal that the sample was handled and the pipeline can continue:

return Gst.FlowReturn.OK
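To see what the two gstreamer-python helpers have to provide, here is a minimal pure-NumPy sketch of the same conversion. The FORMAT_INFO table and to_ndarray are hypothetical names that cover only a few packed formats; the sketch uses np.frombuffer plus reshape instead of the np.ndarray constructor, which is equivalent for tightly packed frames, and it assumes rows carry no stride padding:

```python
import numpy as np

# Hypothetical lookup table (an assumption for this sketch, not gstreamer-python's
# actual implementation): channel count and per-channel dtype of a few packed formats.
FORMAT_INFO = {
    "RGB": (3, np.dtype(np.uint8)),
    "BGR": (3, np.dtype(np.uint8)),
    "RGBA": (4, np.dtype(np.uint8)),
    "BGRA": (4, np.dtype(np.uint8)),
    "GRAY8": (1, np.dtype(np.uint8)),
    "GRAY16_LE": (1, np.dtype("<u2")),
}


def to_ndarray(data: bytes, width: int, height: int, fmt: str) -> np.ndarray:
    """Interpret one frame of raw bytes as an (H, W, C) array, or (H, W) if C == 1.

    Assumes rows are tightly packed, i.e. no stride padding at the end of each row.
    """
    channels, dtype = FORMAT_INFO[fmt]
    expected = width * height * channels * dtype.itemsize
    if len(data) != expected:
        raise ValueError("expected {} bytes, got {}".format(expected, len(data)))
    # np.frombuffer shares memory with `data` (no extra copy); the result is read-only
    array = np.frombuffer(data, dtype=dtype).reshape(height, width, channels)
    # Drop only the channel axis, unlike np.squeeze, which would also drop H or W == 1
    return array[:, :, 0] if channels == 1 else array
```

Inside on_buffer this would read array = to_ndarray(buffer.extract_dup(0, buffer.get_size()), w, h, frmt_str). Call .copy() on the result if the frame needs to be modified in place.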

Inside the GstPipeline context, place a while loop that waits until the pipeline finishes processing:

while not pipeline.is_done:
    time.sleep(.1)

Run example

First, clone the gst-python-tutorials project and install it on your PC:

git clone https://github.com/jackersson/gst-python-tutorials.git

cd gst-python-tutorials

python3 -m venv venv

source venv/bin/activate

pip install --upgrade wheel pip setuptools

pip install --upgrade --requirement requirements.txtNow, everything is ready to run test command

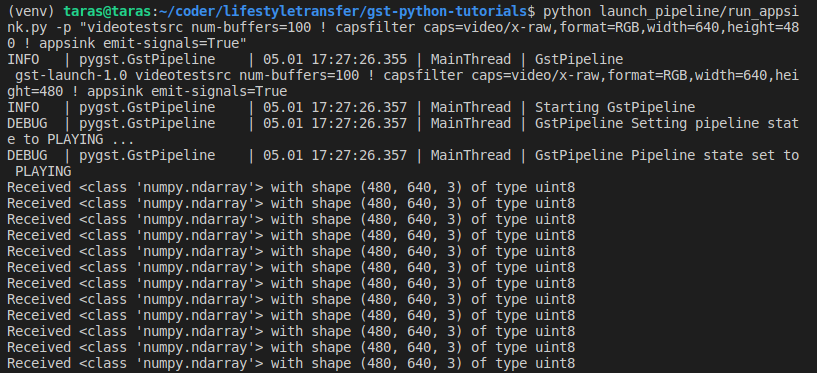

python launch_pipeline/run_appsink.py -p \

"videotestsrc num-buffers=100 ! \

capsfilter caps=video/x-raw,format=RGB,width=640,height=480 ! \

appsink emit-signals=True"You should receive similar output

You can easily play with -p and specify any pipeline you want (resolution, format, plugins, …). Check out awesome gstreamer pipelines.
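If you swap in a live source (for example a camera) instead of videotestsrc num-buffers=100, the stream presumably never reaches end-of-stream, so the while not pipeline.is_done loop keeps running until interrupted. Below is a minimal sketch of polling with a timeout instead. wait_until is a hypothetical helper; pipeline.is_done is the gstreamer-python attribute used in the script above:

```python
import time
import typing as typ


def wait_until(predicate: typ.Callable[[], bool],
               timeout: float = 30.0, interval: float = 0.1) -> bool:
    """Poll predicate() every `interval` seconds.

    Returns True as soon as predicate() is true, or False once `timeout` seconds
    have passed without that happening.
    """
    deadline = time.monotonic() + timeout
    while not predicate():
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval)
    return True
```

Inside the GstPipeline context, the polling loop could then become finished = wait_until(lambda: pipeline.is_done, timeout=60.0), and the script can decide what to do when finished is False.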

Hope everything works as expected 🙂. In case of any bugs, errors or suggestions, leave a comment.

Conclusion

Gstreamer AppSink is a simple way to receive video frames from pipelines of any complexity and forward them to further video processing in Python.

Hey! I’m trying to use your tutorial to receive a stream from a Raspberry Pi.

On the RPi I have raspivid sending MJPEG via UDP.

On the PC side I’ve tried to launch your code from GitHub without any modification, but Python shows this error:

return [e for e in self._pipeline.iterate_elements() if isinstance(e, cls)]
TypeError: 'Iterator' object is not iterable

Does it mean that there is no data in the pipeline?

What do you think about the RPi concept? Is it a good idea to transfer data like this?

Best regards

Hi Julia,

Thank you for your question.

Can you specify your versions of Gstreamer and Ubuntu?

gst-inspect-1.0 --version
lsb_release -a

I faced a similar problem when the gstreamer-python package was installed with some errors. You can check for that by installing with pip and the -v flag:

pip install -U git+https://github.com/jackersson/gstreamer-python.git#egg=gstreamer-python -v

For example, my output (the places to pay attention to are marked with '-->')

I tried it on both my PC and an RPi, and everything worked perfectly. You can also use the prepared Docker container.

The main purpose of installing gstreamer-python is to replace the old Gst.py file (the reason for your error) with a new one.

> What do you think about the RPi concept? Is it a good idea to transfer data like this?

Also, the RPi is a good choice for streaming low-resolution video. Make sure you are using hardware-accelerated MJPEG encoding (e.g. omxmjpegenc).

Best regards,