How to implement a Video Crop Gstreamer plugin? (Caps negotiation)


This guide shows how to implement a video crop GStreamer plugin. It also presents an approach for implementing plugins whose input and output resolutions differ.

Requirements

- Ubuntu 18

- Python 3.6

- Gstreamer with Python Bindings. Look at “How to install Gstreamer Python Bindings on Ubuntu 18”

- gstreamer-python


Learn how to:

- write plugins with different input and output formats

- explore the videocrop plugin from the GStreamer Good plugins

- implement your own videocrop plugin in Python

- understand the caps negotiation algorithm

- use the plugins videotestsrc, xvimagesink, and videoconvert

Introduction

Many GStreamer plugins have different input and output formats. For example, videoconvert converts between colorspaces such as YUV and RGB, while videoscale and videocrop change the video resolution. In Computer Vision tasks we often want to run object detection only on a specific Region of Interest (ROI), which also reduces memory consumption and computational cost.

In GStreamer, plugins with different input and output formats are implemented using caps negotiation. In my opinion the official documentation is quite difficult to follow, so let’s work through an example.

Note: Recall that caps (capabilities) describe media types. For example, raw Full HD video in the RGB colorspace at 30 frames per second can be described as:

"video/x-raw,format=RGB,width=1920,height=1080,framerate=30/1"

Guide

Note: This tutorial is heavily based on audioplot, an official example from the gst-python repository.

Define Caps

First, let’s define the input and output capabilities (Gst.Caps). Our video crop plugin will support all RGB-based colorspaces and grayscale:

RGB = "RGBx,xRGB,BGRx,xBGR,RGBA,ARGB,BGRA,ABGR,RGB,BGR,RGB16,RGB15"
GRAY = "GRAY8,GRAY16_LE,GRAY16_BE"
# join with a comma, otherwise "RGB15" and "GRAY8" merge into "RGB15GRAY8"
FORMATS = [f.strip() for f in (RGB + "," + GRAY).split(',')]
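The format list is built by splitting one comma-separated string, so the separator between RGB and GRAY matters: without a comma, "RGB15" and "GRAY8" collapse into a single bogus entry. A quick plain-Python check (the name naive is only for illustration):

```python
RGB = "RGBx,xRGB,BGRx,xBGR,RGBA,ARGB,BGRA,ABGR,RGB,BGR,RGB16,RGB15"
GRAY = "GRAY8,GRAY16_LE,GRAY16_BE"

# Plain concatenation leaves no comma between "RGB15" and "GRAY8"
naive = [f.strip() for f in (RGB + GRAY).split(",")]
# Joining with "," keeps every format name separate
FORMATS = [f.strip() for f in ",".join([RGB, GRAY]).split(",")]

print(len(naive), "RGB15GRAY8" in naive)  # 14 True
print(len(FORMATS), "GRAY8" in FORMATS)   # 15 True
```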

The media type is raw video: video/x-raw

Width and height can take any value in the range [1, MAXINT]:

range(1, GLib.MAXINT)

To express these parameters in a GStreamer-friendly form, use Gst.ValueList and Gst.IntRange:

import gi
gi.require_version('Gst', '1.0')
from gi.repository import Gst, GLib

raw_video = 'video/x-raw'
formats = Gst.ValueList(FORMATS)
width = Gst.IntRange(range(1, GLib.MAXINT))
height = Gst.IntRange(range(1, GLib.MAXINT))

Now, let’s put everything together for input caps:

IN_CAPS = Gst.Caps(Gst.Structure(raw_video, format=formats, width=width, height=height))

And for the output caps:

OUT_CAPS = Gst.Caps(Gst.Structure(raw_video, format=formats, width=width, height=height))

Note that the input and output caps are identical for now, so an element built on them accepts any of these formats and resolutions on both pads. The concrete output resolution is chosen later, during caps negotiation.

Implement plugin

We extend GstBase.BaseTransform to build the videocrop plugin. All the steps are described in detail in “How to write Gstreamer plugin with Python”. Let’s start with the class definition:

class GstVideoCrop(GstBase.BaseTransform):

- name: gstvideocrop

- description metadata:

("Crop",  # Name
 "Filter/Effect/Video",  # Classification
 "Crops Video into user-defined region",  # Description
 "Taras Lishchenko <taras at lifestyletransfer dot com>")  # Author

- pads (input and output):

Gst.PadTemplate.new("sink",
                    Gst.PadDirection.SINK,
                    Gst.PadPresence.ALWAYS,
                    IN_CAPS)

Gst.PadTemplate.new("src",
                    Gst.PadDirection.SRC,
                    Gst.PadPresence.ALWAYS,
                    OUT_CAPS)

Note: Recall that a plugin’s sink pad is its input and its src pad is its output.

Next, define the transform function. For videocrop we use do_transform (not the in-place do_transform_ip), because the output buffer has different dimensions than the input buffer. Leave it empty for now:

def do_transform(self, inbuffer: Gst.Buffer, outbuffer: Gst.Buffer) -> Gst.FlowReturn:
    # empty for now
    return Gst.FlowReturn.OK

Add the following code at the end of the file to register the plugin, so that it can be used from the command line:

GObject.type_register(GstVideoCrop)
__gstelementfactory__ = ("gstvideocrop", Gst.Rank.NONE, GstVideoCrop)

Define properties

Note: Properties are described in the post “How to write Gstreamer plugin with Python” (Properties section).

Properties are:

- top

- left

- right

- bottom

Each property sets the number of pixels to remove from the corresponding side of the image. All properties are of type int, in the range [-MAXINT, MAXINT], with a default value of 0.

Note: A negative value pads that side with black pixels instead of cropping it. If the crop exceeds the image size, a ValueError is raised.
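These semantics boil down to simple arithmetic on the output size: each side subtracts its value, so negative values grow the frame. A minimal plain-Python sketch, where crop_output_size is a hypothetical helper (not part of the plugin), the 320x240 input is chosen only for illustration, and "at least one pixel must remain" is an assumption about what counts as exceeding the image:

```python
def crop_output_size(in_width, in_height, left=0, right=0, top=0, bottom=0):
    """Hypothetical helper: (width, height) produced by the crop.

    Positive values remove pixels from that side, negative values pad it
    with black pixels, so each side simply subtracts its value.
    """
    width = in_width - left - right
    height = in_height - top - bottom
    # assumption: at least one pixel must remain in each dimension
    if width < 1:
        raise ValueError("Left and Right Bounds exceed Input Width")
    if height < 1:
        raise ValueError("Top and Bottom Bounds exceed Input Height")
    return width, height


print(crop_output_size(320, 240, left=10, right=20, top=20, bottom=80))  # (290, 140)
print(crop_output_size(320, 240, left=-10, top=-5))                      # (330, 245)
```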

Note: This plugin is very similar to the official videocrop plugin. To check its properties, run:

gst-inspect-1.0 videocrop

Caps negotiation

Note: Caps negotiation for transform elements is described in detail in the official GStreamer documentation (see References).

Transform caps

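do_transform_caps answers the question: given the caps on one pad (direction), which caps are possible on the opposite pad? Since our element can produce any supported format and resolution, it returns the template caps of the opposite pad, intersected (Gst.Caps.intersect) with the optional filter caps. Take a look at the overridden do_transform_caps implementation: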

def do_transform_caps(self, direction: Gst.PadDirection, caps: Gst.Caps, filter_: Gst.Caps) -> Gst.Caps:
    # caps on the src pad -> return what the sink pad may accept, and vice versa
    caps_ = IN_CAPS if direction == Gst.PadDirection.SRC else OUT_CAPS

    # intersect with the filter caps, if any
    if filter_:
        # create new caps that contain only the formats common to both
        caps_ = caps_.intersect(filter_)

    return caps_

Note: Caps intersection is explained in the following Colab Notebook.

Example:

# IN Caps

video/x-raw, format=(string){ RGB, GRAY8 }, width=(int){ 320, 640, 1280, 1920 }, height=(int){ 240, 480, 720, 1080 }

# OUT Caps

video/x-raw, format=(string){ RGB }, width=(int){ 640, 1280, 1920 }, height=(int){ 480, 720, 1080 }

# Intersection

video/x-raw, format=(string)RGB, width=(int){ 640, 1280, 1920 }, height=(int){ 480, 720, 1080 }
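To reproduce the example above without GStreamer, here is a toy model of intersection for a single structure, where each field is a set of allowed values. It is an illustration only (intersect_caps is hypothetical): the real Gst.Caps.intersect() also handles ranges, fractions, media types, and caps with several structures.

```python
def intersect_caps(a, b):
    """Toy model of Gst.Caps.intersect() for one structure.

    Each caps is a dict mapping a field name to the set of allowed values.
    Returns None when some shared field has no value in common.
    """
    result = {}
    # deterministic field order: fields of `a` first, then extra ones of `b`
    for field in list(a) + [f for f in b if f not in a]:
        if field in a and field in b:
            common = a[field] & b[field]
            if not common:
                return None  # incompatible: the caps cannot be linked
            result[field] = common
        else:
            # a field present on one side only is unconstrained on the other
            result[field] = a[field] if field in a else b[field]
    return result


in_caps = {"format": {"RGB", "GRAY8"},
           "width": {320, 640, 1280, 1920},
           "height": {240, 480, 720, 1080}}
out_caps = {"format": {"RGB"},
            "width": {640, 1280, 1920},
            "height": {480, 720, 1080}}

for field, values in intersect_caps(in_caps, out_caps).items():
    print(field, sorted(values))
# format ['RGB']
# width [640, 1280, 1920]
# height [480, 720, 1080]
```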

Fixate Caps

The caps produced by the previous step may still contain lists or ranges of values. In the following example, format is the list {RGB, GRAY8} and height is the list {240, 480, 720, 1080}:

video/x-raw, format=(string){ RGB, GRAY8 }, width=(int){ 320, 640, 1280, 1920 }, height=(int){ 240, 480, 720, 1080 }

Caps are fixed when no field holds a list or range. For example, run the following code and note that after the fixate() call every field holds a single value:

import gi
gi.require_version('Gst', '1.0')
from gi.repository import Gst
Gst.init(None)

caps_string = "video/x-raw, format=(string)RGB, width=(int){ 640, 1280, 1920 }, height=(int){ 480, 720, 1080 }"
caps = Gst.Caps.from_string(caps_string)
print(f"Before fixate: {caps}")
caps = caps.fixate()
print(f"After fixate: {caps}")

> Before fixate: video/x-raw, format=(string)RGB, width=(int){ 640, 1280, 1920 }, height=(int){ 480, 720, 1080 }

> After fixate: video/x-raw, format=(string)RGB, width=(int)640, height=(int)480

Note: Caps fixation is explained in the following Colab Notebook.
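As a mental model of what fixate() did above, here is a toy Python version. It assumes caps are a dict in which a Python list stands for a GStreamer list and a Python range for an integer range, and it follows GStreamer's default rule for these two value types (first list entry, range minimum). fixate is a hypothetical helper, not the real Gst.Caps.fixate().

```python
def fixate(structure):
    """Toy model of caps fixation for one structure.

    Lists are fixed to their first entry, integer ranges (modelled with
    Python's range) to their minimum; single values are already fixed.
    """
    fixed = {}
    for field, value in structure.items():
        if isinstance(value, list):
            fixed[field] = value[0]
        elif isinstance(value, range):
            fixed[field] = value.start
        else:
            fixed[field] = value
    return fixed


caps = {"format": "RGB", "width": [640, 1280, 1920], "height": [480, 720, 1080]}
print(fixate(caps))  # {'format': 'RGB', 'width': 640, 'height': 480}
```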

Now, override do_fixate_caps(). In this function we:

- calculate the output resolution from the properties left, right, top, and bottom

- fixate the caps to that output resolution (or the nearest allowed value)

def do_fixate_caps(self, direction: Gst.PadDirection, caps: Gst.Caps, othercaps: Gst.Caps) -> Gst.Caps:
    """
    caps: initial caps (on the pad given by direction)
    othercaps: target caps (on the opposite pad) to fixate
    """
    if direction == Gst.PadDirection.SRC:
        return othercaps.fixate()
    else:
        # calculate the output size according to the properties: top, left, bottom, right
        in_width, in_height = [caps.get_structure(0).get_value(v) for v in ['width', 'height']]

        # at least one pixel must remain in each dimension
        if (self._left + self._right) >= in_width:
            raise ValueError("Left and Right Bounds exceed Input Width")

        if (self._bottom + self._top) >= in_height:
            raise ValueError("Top and Bottom Bounds exceed Input Height")

        width = in_width - self._left - self._right
        height = in_height - self._top - self._bottom

        # pick the allowed values closest to the target size
        new_format = othercaps.get_structure(0).copy()
        new_format.fixate_field_nearest_int("width", width)
        new_format.fixate_field_nearest_int("height", height)

        new_caps = Gst.Caps.new_empty()
        new_caps.append_structure(new_format)

        return new_caps.fixate()

Note: fixate_field_nearest_int() fixates a field to the allowed value nearest to our target output width and height.

Example (see the following Colab Notebook):

Before fixate: video/x-raw, format=(string)RGB, width=(int){ 640, 1280, 1920 }, height=(int){ 480, 720, 1080 }

fixate_field_nearest_int('width', 1920)

fixate_field_nearest_int('height', 1080)

After fixate: video/x-raw, format=(string)RGB, width=(int)1920, height=(int)1080
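A toy model of fixate_field_nearest_int() for a single field helps show how the target size interacts with the allowed values. fixate_nearest_int is hypothetical; it assumes the field is either a list of ints or a step-1 Python range standing in for an integer range, and that on a tie the first list entry wins.

```python
MAXINT = 2**31 - 1  # same value as GLib.MAXINT


def fixate_nearest_int(allowed, target):
    """Toy model of Gst.Structure.fixate_field_nearest_int() for one field.

    `allowed` is a list of ints or a step-1 range standing in for an integer
    range. Returns the allowed value closest to `target`; on a tie the first
    candidate in the list wins.
    """
    if isinstance(allowed, range):
        # clamp the target into [first, last] of the range
        return min(max(target, allowed.start), allowed[-1])
    return min(allowed, key=lambda value: abs(value - target))


print(fixate_nearest_int([640, 1280, 1920], 1920))  # 1920
print(fixate_nearest_int([640, 1280, 1920], 1000))  # 1280
print(fixate_nearest_int(range(1, MAXINT), 290))    # 290
```

With the OUT_CAPS defined earlier, width and height are integer ranges [1, MAXINT], so the nearest allowed value is the computed size itself. A list of fixed sizes, as in the example above, would snap to the closest entry instead.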

Set Caps

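The easiest part is overriding do_set_caps(). We use it only to switch the element into passthrough mode when the input resolution equals the output resolution. In passthrough mode, BaseTransform forwards input buffers downstream without calling do_transform.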

def do_set_caps(self, incaps: Gst.Caps, outcaps: Gst.Caps) -> bool:
    """
    Note: Use do_set_caps only to set plugin in passthrough mode
    """
    in_w, in_h = [incaps.get_structure(0).get_value(v) for v in ['width', 'height']]
    out_w, out_h = [outcaps.get_structure(0).get_value(v) for v in ['width', 'height']]

    # passthrough only if input_size == output_size; also reset it otherwise,
    # in case caps are renegotiated with a different crop
    self.set_passthrough(in_w == out_w and in_h == out_h)

    return True

Video Crop

The last part is do_transform. Now that caps negotiation has fixed the input and output resolutions, cropping the image is straightforward.

First, convert the input and output Gst.Buffer objects to np.ndarray:

in_image = gst_buffer_with_caps_to_ndarray(inbuffer, self.sinkpad.get_current_caps())
out_image = gst_buffer_with_caps_to_ndarray(outbuffer, self.srcpad.get_current_caps())

Define margins to crop/expand for each side:

h, w = in_image.shape[:2]
left, top = max(self._left, 0), max(self._top, 0)
bottom = h - self._bottom
right = w - self._right

Crop the image with an array slice, using the margins calculated above:

crop = in_image[top:bottom, left:right]

Use cv2.copyMakeBorder to pad the image with black pixels on every side whose property is negative, so that it matches the output resolution. Finally, copy the resulting pixels into the output buffer, so they can be passed further down the pipeline.

out_image[:] = cv2.copyMakeBorder(crop,
                                  top=abs(min(self._top, 0)),
                                  bottom=abs(min(self._bottom, 0)),
                                  left=abs(min(self._left, 0)),
                                  right=abs(min(self._right, 0)),
                                  borderType=cv2.BORDER_CONSTANT,
                                  value=0)
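To check the slicing and padding logic without GStreamer, NumPy, or OpenCV, here is a pure-Python model of the same steps on a small 2-D image stored as a list of rows. crop_and_pad is a hypothetical helper: its slice mirrors in_image[top:bottom, left:right], and its padding mirrors cv2.copyMakeBorder with BORDER_CONSTANT and value=0. It assumes at least one pixel survives the crop.

```python
def crop_and_pad(image, left=0, right=0, top=0, bottom=0, fill=0):
    """Pure-Python model of the crop step for a 2-D image (list of rows).

    Positive margins remove pixels (like the array slice), negative margins
    add `fill` pixels (like cv2.copyMakeBorder with BORDER_CONSTANT).
    """
    h, w = len(image), len(image[0])
    # 1) crop: only the positive part of each margin removes pixels
    cropped = [row[max(left, 0):w - max(right, 0)]
               for row in image[max(top, 0):h - max(bottom, 0)]]
    # 2) pad: the negative part of each margin adds constant pixels
    pad_l, pad_r = max(-left, 0), max(-right, 0)
    pad_t, pad_b = max(-top, 0), max(-bottom, 0)
    rows = [[fill] * pad_l + row + [fill] * pad_r for row in cropped]
    new_w = len(rows[0])
    return ([[fill] * new_w for _ in range(pad_t)]
            + rows
            + [[fill] * new_w for _ in range(pad_b)])


image = [[r * 4 + c for c in range(4)] for r in range(4)]
print(crop_and_pad(image, left=1, right=1, top=1, bottom=1))  # [[5, 6], [9, 10]]
print(crop_and_pad(image, left=-1, right=2, top=3))           # [[0, 12, 13]]
```

Splitting each margin into a positive part (crop) and a negative part (pad) is the same decomposition the plugin uses with max(..., 0) and abs(min(..., 0)).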

Example

Now, let’s test everything we’ve implemented. First, clone the repository and prepare the environment:

git clone https://github.com/jackersson/gst-python-plugins.git
cd gst-python-plugins
python3 -m venv venv
source venv/bin/activate
pip install -U wheel pip setuptools
pip install -r requirements.txt

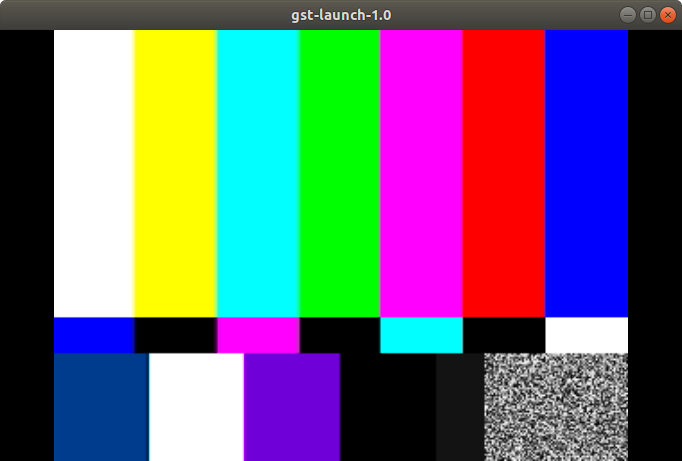

First, display a simple pipeline using videotestsrc:

gst-launch-1.0 videotestsrc ! videoconvert ! xvimagesink

Before testing the gstvideocrop plugin, export the following variable so that GStreamer can find the plugins:

export GST_PLUGIN_PATH=$GST_PLUGIN_PATH:$PWD/venv/lib/gstreamer-1.0/:$PWD/gst/

Run the pipeline with the following command:

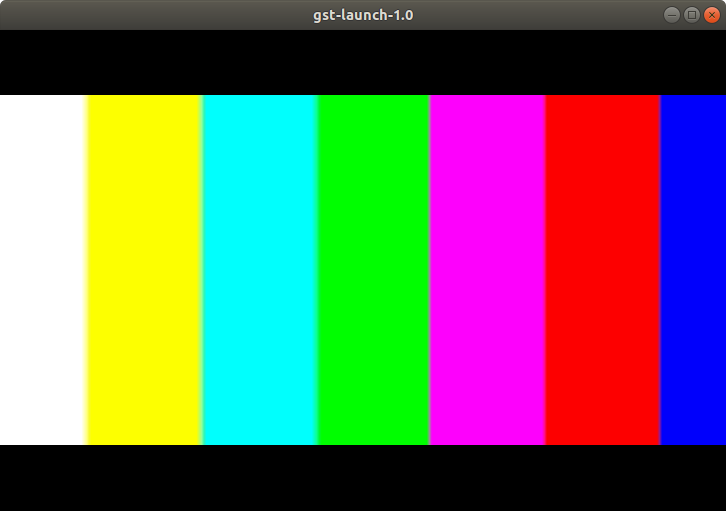

gst-launch-1.0 videotestsrc ! videoconvert ! gstvideocrop left=10 top=20 bottom=80 right=20 ! videoconvert ! xvimagesink

As you can see, the image is now cropped on all four sides.

Conclusion

In this guide we learned how to use caps negotiation to implement a GStreamer plugin with different input and output formats (e.g., videocrop). The main steps of caps negotiation are:

- define (input and output caps)

- transform (do_transform_caps)

- fixate (do_fixate_caps)

- set (do_set_caps)

I hope this is helpful for your development 🙂 As always, if you have any suggestions, leave a comment or contact me via social networks or email.

References

- Official audioplot example

- Caps negotiation. Official documentation